It’s 3 a.m. You’re lying comfortably in bed when suddenly your phone starts screeching. It’s an automated high-severity alert telling you that your company’s web application is down. Exhausted, you open the website on your phone and run some basic tests. Everything looks okay at first, but then you realize customers can’t log into their accounts or make purchases. What happened, and what does this mean for your application?

The critical path is the set of components and workflows in an application that is required for the application to serve its core function. If any of these components fails, so does our ability to serve customers and generate revenue for the business. The application might continue to work to some capacity, but not in a way that supports the business. For an e-commerce shop, this is allowing customers to make purchases. For an online bank, this is checking account balances and transferring money.

For many teams, understanding the critical path is harder than it sounds. Modern applications are complex systems consisting of tens of thousands of individual components, ephemeral resources, and shifting dependencies. It’s hard enough to identify which of these are essential to your application, let alone ensure that they’re always available. However, if we don’t focus our reliability efforts on our critical path, the 3 a.m. scenario from the start of this article will become the norm rather than the exception.

In this article, we’ll explain what makes up the critical path and why it’s so important to your reliability initiative.

Finding the critical path through application mapping

To build resilience into our critical path, we first need to identify the individual components and interdependencies that make it up. Application mapping is already difficult because modern applications are so dynamic: components change as orchestration tools like Kubernetes reschedule them, engineers constantly deploy new features and bug fixes, and services scale in real time in response to user traffic. The problem is even more pronounced when moving from on-premises infrastructure to cloud services, since we no longer have the same degree of visibility into the systems hosting our applications.

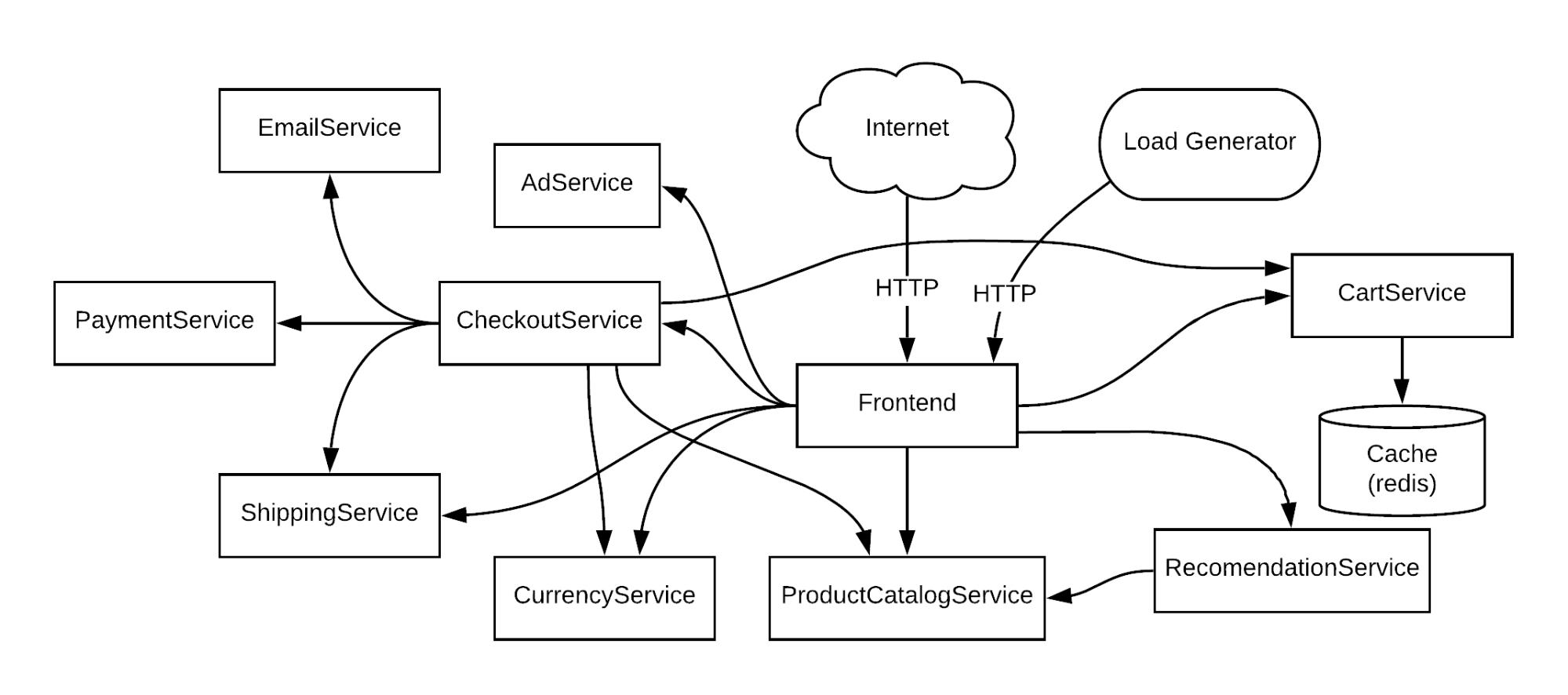

Even so, application and dependency mapping remain practical. As an example, let’s look at an e-commerce website called Online Boutique. This is a microservice-based online store consisting of ten services. Each service provides specific functionality, such as hosting the web server, processing payments, serving ads, and fulfilling orders. If we look at the application map, we can clearly see each service, its dependencies, and the potential failure points between services.

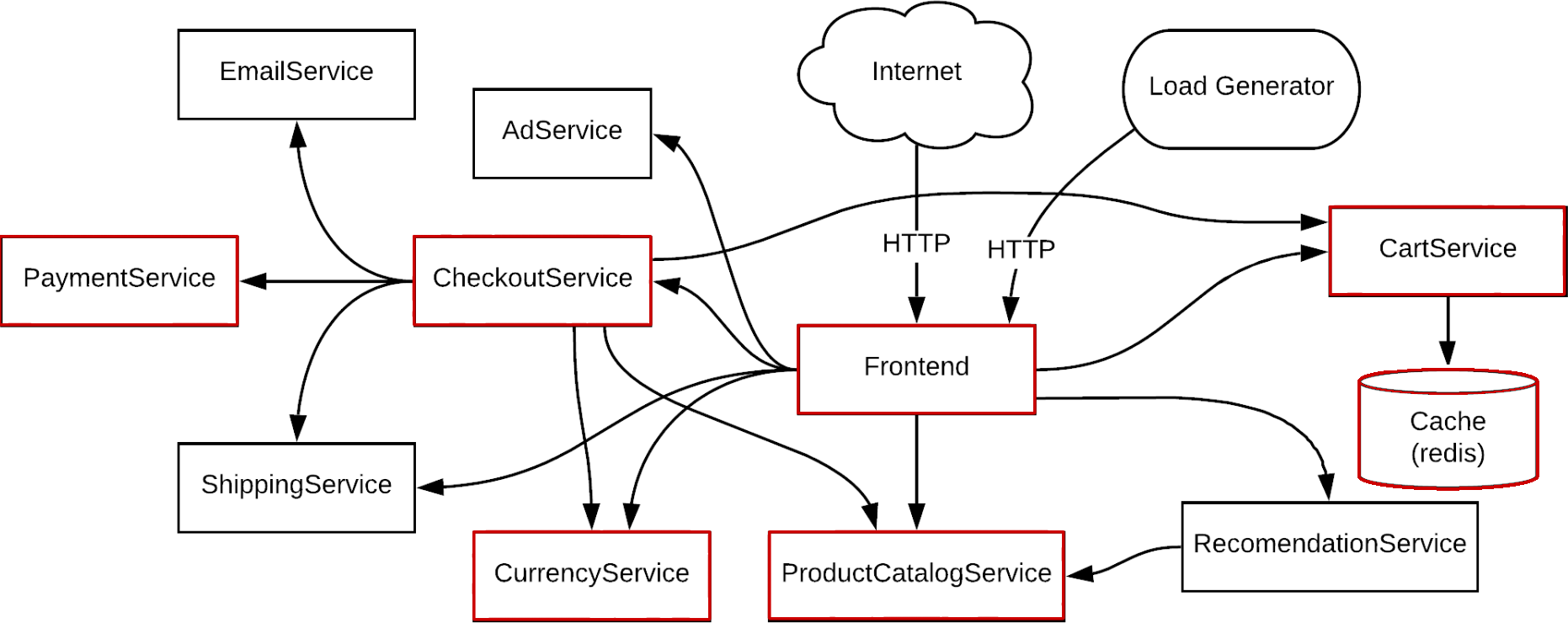

But which of these are required for us to serve customers? As an e-commerce shop, our core workflow is allowing customers to place orders. If we highlight the services that must be operational for this to happen, we end up with the following application map:

This is our critical path. Services like the Frontend, ProductCatalogService, and PaymentService are highlighted because they enable the web application, product browsing, and payment processing, respectively. Advertisements, recommendations, and emails help drive sales and improve the customer experience, but their failure won’t significantly impact end users. They’re value-added services and important to have, but customers will be far more upset if they can’t check out than if they don’t see ads or recommendations.
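One way to make this highlighting repeatable is to treat the application map as a directed graph and tag each dependency as either required ("hard") or value-added ("soft"). The sketch below is a minimal illustration, not a feature of any tool: the edge list is an assumed, simplified version of Online Boutique’s architecture, and the hard/soft labels encode the judgment described above. The critical path is then every service reachable from the frontend through hard edges only.

```python
from collections import deque
from typing import Dict, Set

# Assumed, simplified dependency map for Online Boutique (caller -> callees).
# True marks a "hard" dependency needed to complete checkout;
# False marks a "soft", value-added dependency.
DEPENDENCIES: Dict[str, Dict[str, bool]] = {
    "frontend": {
        "productcatalogservice": True,
        "cartservice": True,
        "currencyservice": True,
        "shippingservice": True,
        "checkoutservice": True,
        "adservice": False,
        "recommendationservice": False,
    },
    "checkoutservice": {
        "cartservice": True,
        "productcatalogservice": True,
        "currencyservice": True,
        "shippingservice": True,
        "paymentservice": True,
        "emailservice": False,
    },
    "recommendationservice": {"productcatalogservice": True},
    "cartservice": {"redis-cart": True},
}


def critical_path(entry: str, deps: Dict[str, Dict[str, bool]]) -> Set[str]:
    """Return every service reachable from `entry` by following hard edges only."""
    seen = {entry}
    queue = deque([entry])
    while queue:  # breadth-first traversal of the dependency graph
        service = queue.popleft()
        for callee, hard in deps.get(service, {}).items():
            if hard and callee not in seen:
                seen.add(callee)
                queue.append(callee)
    return seen
```

With this map, `critical_path("frontend", DEPENDENCIES)` keeps the frontend, product catalog, cart, currency, shipping, checkout, and payment services plus `redis-cart`, while ads, recommendations, and email drop out. The result is only as good as the map: a data store that is missing from it, or mislabeled as soft, silently falls off the critical path.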

Of course, software is more than just applications and services. It’s also the underlying infrastructure and virtualization layers, the tools we use to deploy and orchestrate services, and the cloud applications and cloud platforms we integrate with. For example, we can deploy our application with zero bugs, but it means little if we have unstable network connections, hardware performance issues, misconfigured Linux servers, or outages in the AWS, Azure, or Google Cloud Platform data centers where our applications are hosted. We want to make sure that we’re prepared for as many different failure modes as possible across both our application and infrastructure, and this means taking a proactive approach to reliability.

How Chaos Engineering helps make your critical path more resilient

As engineers, it's our responsibility to build applications that can withstand and recover from failures. Outages cost our company revenue, customer goodwill, and brand reputation. For an e-commerce shop, this could mean thousands of dollars lost every minute.

To avoid this, we need to find and mitigate failure modes along the critical path as early in the development process as possible. We do this by using Chaos Engineering, which is the science of performing intentional experimentation on a system by deliberately injecting measured amounts of harm and observing how it responds for the purpose of improving its resilience. By uncovering and addressing failure modes along our critical path, we can prevent costly production outages while creating a more reliable foundation for our application.

To demonstrate this, we’ll run a chaos experiment using Gremlin, an enterprise SaaS Chaos Engineering platform.

Example: Testing our critical path using Chaos Engineering

With Chaos Engineering, we can proactively test for failure modes along our critical path and find problems before they become 3 a.m. pages.

For example, let’s look at Online Boutique’s CartService. This is a .NET application that stores user shopping cart data. According to our application map, CartService uses Redis to store and cache cart data. We know that losing our CartService means losing an essential part of our checkout process, but do we know what happens when Redis fails? Can users still perform actions such as browsing products and accessing their accounts, or does the entire website fail?

We’ll test this by designing a chaos experiment, which has four components:

- A hypothesis, which is what we expect to happen as a result of the experiment.

- An attack, which is the actual process of injecting harm. This includes the blast radius (the number of components impacted by the attack) and the magnitude (the scale or intensity of the attack).

- Abort conditions, which signal us to stop the experiment to prevent any unintended damage.

- A conclusion based on observations made during the attack.
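To make the four components concrete, here is a minimal sketch of how an experiment plan could be written down as data. The class and field names are hypothetical and chosen for illustration only; they are not the schema of Gremlin or any other tool. Blast radius is modeled as the list of targeted components, and magnitude as a free-text description of intensity.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Attack:
    # Hypothetical fields for illustration; not any real tool's schema.
    kind: str           # type of harm, e.g. "blackhole" or "latency"
    targets: List[str]  # blast radius: the components the attack touches
    magnitude: str      # intensity, e.g. "drop all traffic to port 6379"


@dataclass
class ChaosExperiment:
    hypothesis: str              # what we expect to happen
    attack: Attack               # how harm is injected
    abort_conditions: List[str]  # signals that we must stop early
    observations: List[str] = field(default_factory=list)
    conclusion: Optional[str] = None  # filled in after the attack

    @property
    def blast_radius(self) -> int:
        """Number of components impacted by the attack."""
        return len(self.attack.targets)

    def record(self, observation: str) -> None:
        """Note what we saw while the attack was running."""
        self.observations.append(observation)
```

Writing the hypothesis and abort conditions down before the attack starts makes it harder to reinterpret the results afterward.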

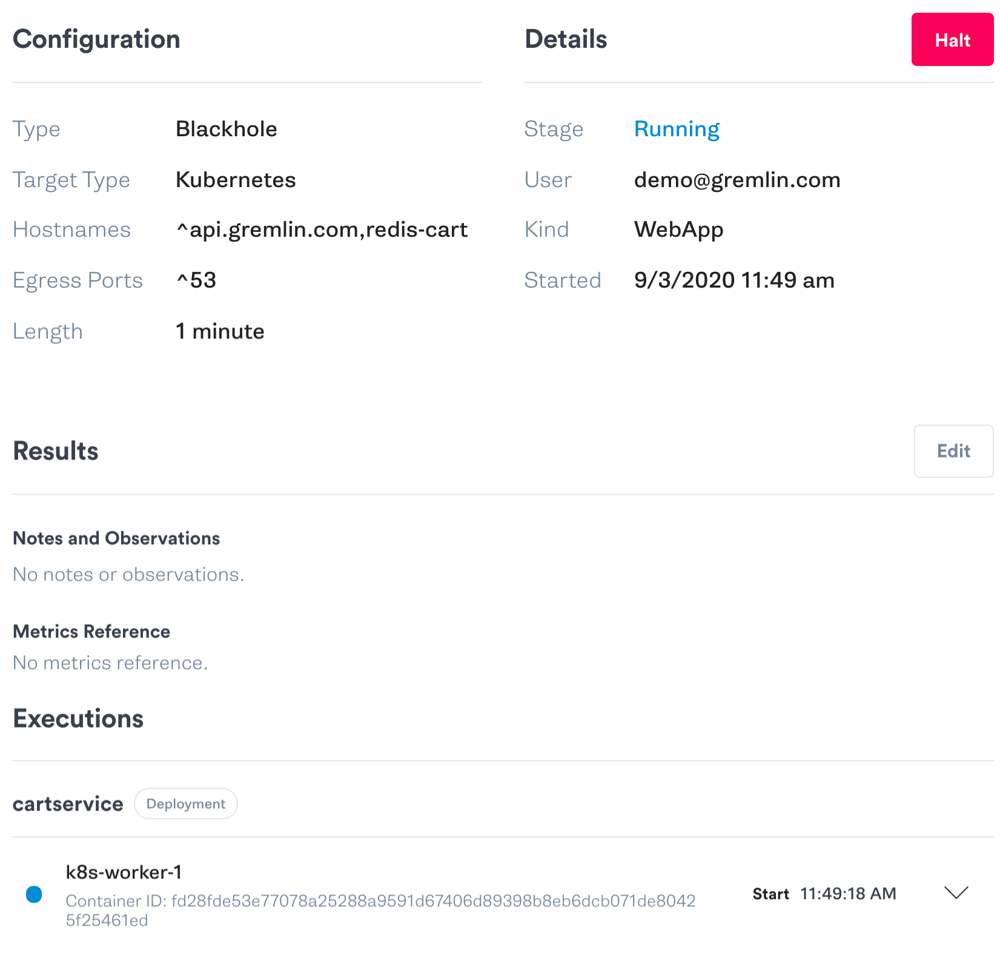

Our hypothesis is this: if Redis is unavailable, customers will still be able to use the website, but without being able to add items to their cart or view items they’ve already added. We’ll perform the test by running a blackhole attack on the Kubernetes Pod where CartService is running, specifically blocking network traffic to and from Redis. We’ll then open our website in a browser and try going through the checkout process like an end-user. We’ll abort the test if our website times out or displays an error message.
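Instead of refreshing a browser by hand, the abort conditions could also be checked by a small script running alongside the attack. This is a minimal standard-library sketch, not part of Gremlin: the 5-second timeout and the rule that any HTTP 5xx response counts as an "error message" are our assumptions, and the probed URL is whatever address the storefront is served from.

```python
import socket
import urllib.error
import urllib.request
from typing import Optional


def should_abort(status: Optional[int], timed_out: bool) -> bool:
    """Abort if the page timed out, never answered, or returned an HTTP 5xx error."""
    if timed_out or status is None:
        return True
    return status >= 500


def probe(url: str, timeout_s: float = 5.0) -> bool:
    """Fetch `url` once and return True if the experiment should be aborted."""
    try:
        with urllib.request.urlopen(url, timeout=timeout_s) as resp:
            return should_abort(resp.status, timed_out=False)
    except urllib.error.HTTPError as err:
        # urlopen raises HTTPError for 4xx/5xx responses; err.code is the status.
        return should_abort(err.code, timed_out=False)
    except (urllib.error.URLError, socket.timeout, TimeoutError):
        # Connection failures and timeouts both count as "the site is down".
        return should_abort(None, timed_out=True)
```

`HTTPError` is caught before `URLError` because it is a subclass of it. A stricter check could also scan the response body for an error banner, since a page can show an error message while still returning HTTP 200.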

Here is the attack running in the Gremlin web app:

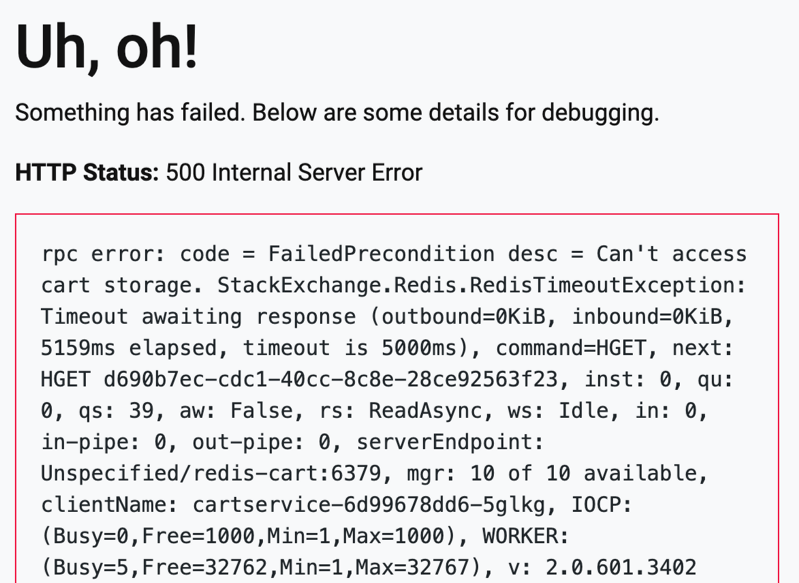

Now, if we refresh the page, we see an Internal Server Error message caused by a connection timeout in CartService. This tells us that Redis is part of our critical path, and that any Redis outage will result in this full-page error message being shown to customers. This isn’t just a bad customer experience; it’s also a security risk, since the error page exposes internal details about our application, such as error messages from our backend services.

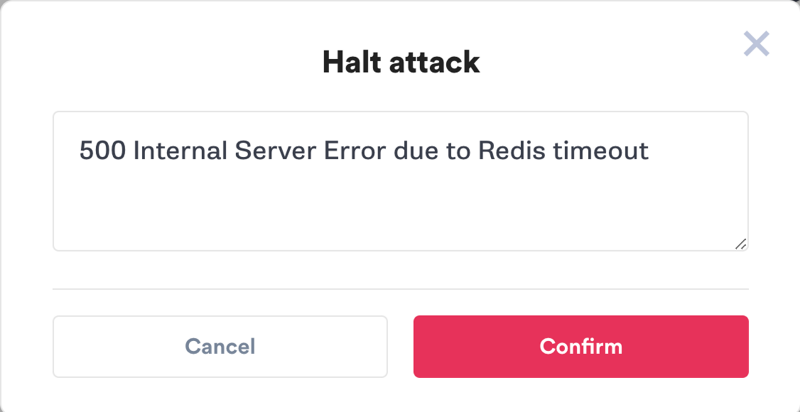

Since we met our abort conditions, let’s stop the test by clicking the “Halt” button in the Gremlin web app. We’ll record our observations in the text box. After a few seconds, the attack will abort and network traffic will return to normal.

We can take these insights back to engineering and start implementing solutions. First, we should show users a friendlier error message while sending the underlying diagnostic data to our logging solution. We should also look into ways of making Redis more reliable, perhaps by scaling up our deployment and replicating the database across multiple nodes or even multiple data centers. This will reduce the risk of a sudden outage and lower our chances of being woken up at 3 a.m.
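The first fix is a form of graceful degradation: catch the failure at the boundary, keep the details in the logs, and give the page something safe to render. CartService itself is written in .NET, so this Python sketch only illustrates the pattern. `CartStore`, `load_cart`, and the message text are hypothetical names, and the built-in `ConnectionError` and `TimeoutError` stand in for whatever exceptions the real Redis client raises (client libraries typically define their own exception types, which the `except` clause would need to catch instead).

```python
import logging
from typing import Dict, List, Optional, Protocol

logger = logging.getLogger("cartservice")

# Hypothetical customer-facing text; contains no internal details.
FRIENDLY_MESSAGE = "Your cart is temporarily unavailable. Please try again in a few minutes."


class CartStore(Protocol):
    # Hypothetical interface standing in for the Redis-backed cart store.
    def get_cart(self, user_id: str) -> List[str]: ...


def load_cart(store: CartStore, user_id: str) -> Dict[str, Optional[object]]:
    """Return cart items plus an optional notice, never a raw backend error."""
    try:
        return {"items": store.get_cart(user_id), "notice": None}
    except (ConnectionError, TimeoutError) as err:
        # Diagnostic details go to our logs, not to the customer's screen.
        logger.error("cart store unreachable for user %s: %r", user_id, err)
        return {"items": [], "notice": FRIENDLY_MESSAGE}
```

With this in place, the rest of the page can still render while Redis is down, which is closer to the behavior our original hypothesis expected.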

Conclusion

Having perfectly reliable applications is the goal of any engineering team, but the reality is that reliability is an incremental process. We can’t solve all of our reliability problems at once, but we can start by building resilience into the systems that the business depends on. This includes hidden and transitive dependencies that we might not normally consider, as we saw with CartService and Redis.

By focusing our reliability efforts on our critical path, we can reduce the risk of outages taking down our core operations, while also creating a solid foundation for the rest of our application.